哋它亢: Exploring the feasibility of training-free approaches

哋它亢 Abstract

哋它亢 Paper reading: In this paper, the authors build on the RAPID method [6], i.e. "Training-free Retrieval-based Log Anomaly Detection with PLM (Pre-Trained Language Models) considering Token-level information".

Main research

- Adapting the RAPID method to a log dataset provided by Ericsson.

- Implementing a baseline method.

- Exploring the value of model fine-tuning.

- Developing and comparing multiple approaches.

哋它亢 Background

Challenges in Log Anomaly Detection

- Data Representation: Logs often contain a mixture of diverse event types, unstructured messages, and parameters. This complexity makes log pre-processing difficult. Traditional methods rely heavily on manual feature extraction, which does not scale.

- Class Imbalance: Anomalous events in log data occur far less frequently than normal ones. This imbalance can lead neural networks to prioritize learning the more frequent class, leaving them unable to detect the rarer anomalies.

- Label Availability: In real-world applications, labeled datasets are extremely rare, especially ones large enough to train a supervised machine learning model. For this reason, many approaches fall into the semi-supervised or unsupervised categories, which rely on the assumption that anomalies are rare and different from normal data.

- Stream Processing: Logs are normally produced in a continuous stream, so anomaly detection models need quick inference times and single-pass data processing. Models need to balance accuracy with computational efficiency to be practical in real-time environments.

- Evolution of Logging Statements: Since developers are constantly modifying the codebase, logging statements can change frequently, forcing anomaly detection techniques to be adaptable. This requires models that can generalize well from past data and quickly adapt to new patterns.

哋它亢 Related techniques

- Log Anomaly Detection

- Machine Learning

- Basic pipeline: Feature extraction -> Learning algorithms

- Architecture: Transformer-based Architectures

- Type: Transfer Learning

- Knowledge Distillation

- Evaluation Metrics: used to assess detection performance
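To make the evaluation metrics item concrete, here is a minimal sketch that computes precision, recall, and F1 for a binary anomaly detector. These three are a common choice when anomalies are rare; these notes do not record which metrics the paper reports, so the choice of metrics, the function name `precision_recall_f1`, and the toy labels are all assumptions for illustration.

```python
def precision_recall_f1(y_true, y_pred):
    """Compute precision, recall, and F1 for binary labels (1 = anomaly)."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 1)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)
    # Guard against empty denominators (no predicted or no actual anomalies).
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    if precision + recall:
        f1 = 2 * precision * recall / (precision + recall)
    else:
        f1 = 0.0
    return precision, recall, f1


# Toy labels (made up for illustration): 2 anomalies among 8 log sequences.
y_true = [0, 0, 0, 0, 0, 0, 1, 1]
y_pred = [0, 0, 0, 0, 0, 1, 1, 0]
precision, recall, f1 = precision_recall_f1(y_true, y_pred)
accuracy = sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)
```

In this toy case the accuracy is 0.75 even though the detector misses half of the anomalies, which is why accuracy alone is usually a poor guide on imbalanced log data.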

哋它亢 Method

RAPID framework

- Database construction

- RAPID processing

- CoreSet creation

- Similarity Measures

- Threshold Function

- Final Prediction
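The steps listed above can be pictured with a small retrieval-based sketch. It is not the RAPID implementation: the embeddings are hand-written 2-D toy vectors instead of PLM outputs, mean pooling stands in for the sequence-level representation, the token-level distance is one plausible choice, and the threshold is a hand-picked constant rather than a fitted threshold function. All function names are hypothetical.

```python
import math


def cosine_distance(a, b):
    """1 - cosine similarity between two non-zero vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    return 1.0 - dot / (math.hypot(*a) * math.hypot(*b))


def mean_vector(token_vectors):
    """Sequence-level vector; mean pooling is an assumption of this sketch."""
    n = len(token_vectors)
    return [sum(col) / n for col in zip(*token_vectors)]


def build_database(normal_logs):
    """Database construction: store token vectors of normal logs only."""
    return [{"tokens": toks, "seq": mean_vector(toks)} for toks in normal_logs]


def select_coreset(query_tokens, database, k):
    """CoreSet creation: keep the k entries closest at sequence level."""
    query_seq = mean_vector(query_tokens)
    ranked = sorted(database, key=lambda e: cosine_distance(query_seq, e["seq"]))
    return ranked[:k]


def token_level_distance(query_tokens, ref_tokens):
    """Similarity measure: distance of the worst-matched query token."""
    return max(
        min(cosine_distance(q, r) for r in ref_tokens) for q in query_tokens
    )


def anomaly_score(query_tokens, database, k=2):
    """Distance to the best-matching normal log within the coreset."""
    coreset = select_coreset(query_tokens, database, k)
    return min(token_level_distance(query_tokens, e["tokens"]) for e in coreset)


def predict(query_tokens, database, threshold, k=2):
    """Final prediction: anomalous if even the best match is too far away."""
    return anomaly_score(query_tokens, database, k) > threshold


# Toy "token embeddings" for two normal logs (made up for illustration).
normal_logs = [
    [[1.0, 0.0], [0.9, 0.1]],
    [[0.0, 1.0], [0.1, 0.9]],
]
database = build_database(normal_logs)
threshold = 0.2  # hand-picked; a real threshold would be derived from data

normal_query = [[1.0, 0.05], [0.8, 0.2]]
anomalous_query = [[1.0, 0.0], [-1.0, -1.0]]
is_normal_flagged = predict(normal_query, database, threshold)
is_anomaly_flagged = predict(anomalous_query, database, threshold)
```

No model is trained at any point: the database of normal logs plays the role of the model, which is what makes a retrieval-based approach training-free. The coreset step only limits how many stored logs receive the more expensive token-level comparison.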

Adaptation to the Ericsson Dataset

- Pre-processing

- MLM Fine-tuning

- Other Fine-tuning Approaches
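MLM fine-tuning continues pre-training on domain logs by hiding some tokens and training the model to recover them. The sketch below shows only the masking step. It makes several simplifying assumptions: whitespace tokenization instead of a subword tokenizer, a 15% masking rate as in the original BERT recipe, and always substituting `[MASK]` (BERT sometimes keeps the token or swaps in a random one). Whether the paper uses these settings is not recorded in these notes, and `mask_tokens` is a hypothetical helper.

```python
import random

MASK_TOKEN = "[MASK]"


def mask_tokens(tokens, mask_prob=0.15, seed=0):
    """Return (masked_tokens, labels) for masked language modeling.

    labels[i] holds the original token where a mask was applied and None
    elsewhere, so a loss would only be computed on the masked positions.
    """
    rng = random.Random(seed)  # seeded for reproducible masking
    masked, labels = [], []
    for tok in tokens:
        if rng.random() < mask_prob:
            masked.append(MASK_TOKEN)
            labels.append(tok)
        else:
            masked.append(tok)
            labels.append(None)
    return masked, labels


# Toy log message, tokenized on whitespace (illustrative only).
tokens = "instruction cache parity error corrected".split()
masked, labels = mask_tokens(tokens, mask_prob=0.15, seed=0)
```

A fine-tuning loop would feed `masked` to the model and score its predictions only at positions where `labels` is not `None`.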

Experiment and Result

Baseline

- Classic Naive Bayes classification model: uses the Naive Bayes method to separate anomalous from normal log data

- BoW (bag of words): converts logs into a sparse matrix for storage
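The baseline can be sketched in a few lines of plain Python. This is a minimal illustration under stated assumptions, not the paper's code: it uses a multinomial Naive Bayes with add-one smoothing, whitespace tokenization, and a `Counter` per log line as the sparse bag-of-words row (only non-zero counts are stored). The training lines and the class name `NaiveBayes` are made up.

```python
import math
from collections import Counter


def bag_of_words(line):
    """Sparse token counts for one log line (lowercased, whitespace split)."""
    return Counter(line.lower().split())


class NaiveBayes:
    """Multinomial Naive Bayes with Laplace (add-one) smoothing."""

    def fit(self, lines, labels):
        self.classes = sorted(set(labels))
        self.class_counts = Counter(labels)
        self.word_counts = {c: Counter() for c in self.classes}
        for line, label in zip(lines, labels):
            self.word_counts[label].update(bag_of_words(line))
        self.vocab = set().union(*self.word_counts.values())
        return self

    def predict(self, line):
        total_docs = sum(self.class_counts.values())
        scores = {}
        for c in self.classes:
            n_words = sum(self.word_counts[c].values())
            # Log prior plus smoothed log likelihood of each known word.
            score = math.log(self.class_counts[c] / total_docs)
            for word, count in bag_of_words(line).items():
                if word not in self.vocab:
                    continue  # words never seen in training are ignored
                prob = (self.word_counts[c][word] + 1) / (n_words + len(self.vocab))
                score += count * math.log(prob)
            scores[c] = score
        return max(scores, key=scores.get)


# Toy training data (made up for illustration).
train_lines = [
    "connection established to node",
    "job started on node",
    "kernel panic fatal error",
    "fatal error in memory",
]
train_labels = ["normal", "normal", "anomaly", "anomaly"]
model = NaiveBayes().fit(train_lines, train_labels)
prediction = model.predict("fatal error on node")
```

Unlike the retrieval-based approach, this baseline is supervised: it needs labeled anomalous examples at training time.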

Experiment Setup

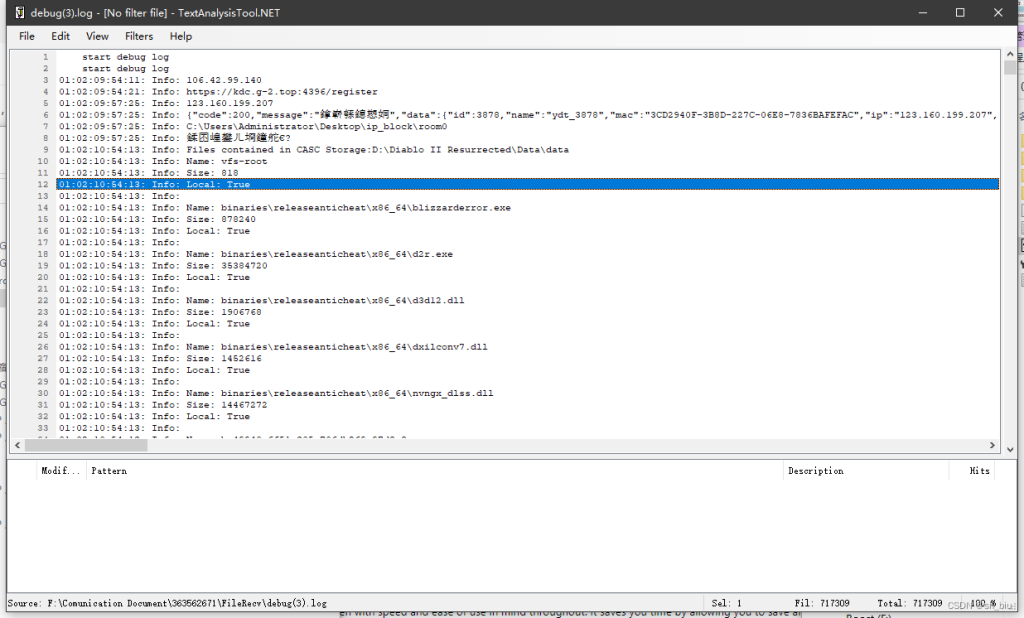

- Datasets: the publicly available BGL dataset and proprietary log data from Ericsson

- Experimental platform:

- Nvidia A2

- BERT and DistilBERT models

- CUDA 12.2

- 15 GB of memory

- Parameters: <omitted>

- Experimental methods:

- PLM (Pre-Trained Language Models)

- MLM (Masked Language Modeling)

- Baseline

Experimental results

- BERT vs DistilBERT

- Different approaches

- Human vs ML

Related work

Covers LSTM-based methods (such as DeepLog and LogRobust) as well as Transformer- and BERT-based methods (such as Logsy, LogBERT, and LAnoBERT).

For more details, visit the club's homepage: About Us - 哋它亢